Why evaluation belongs everywhere

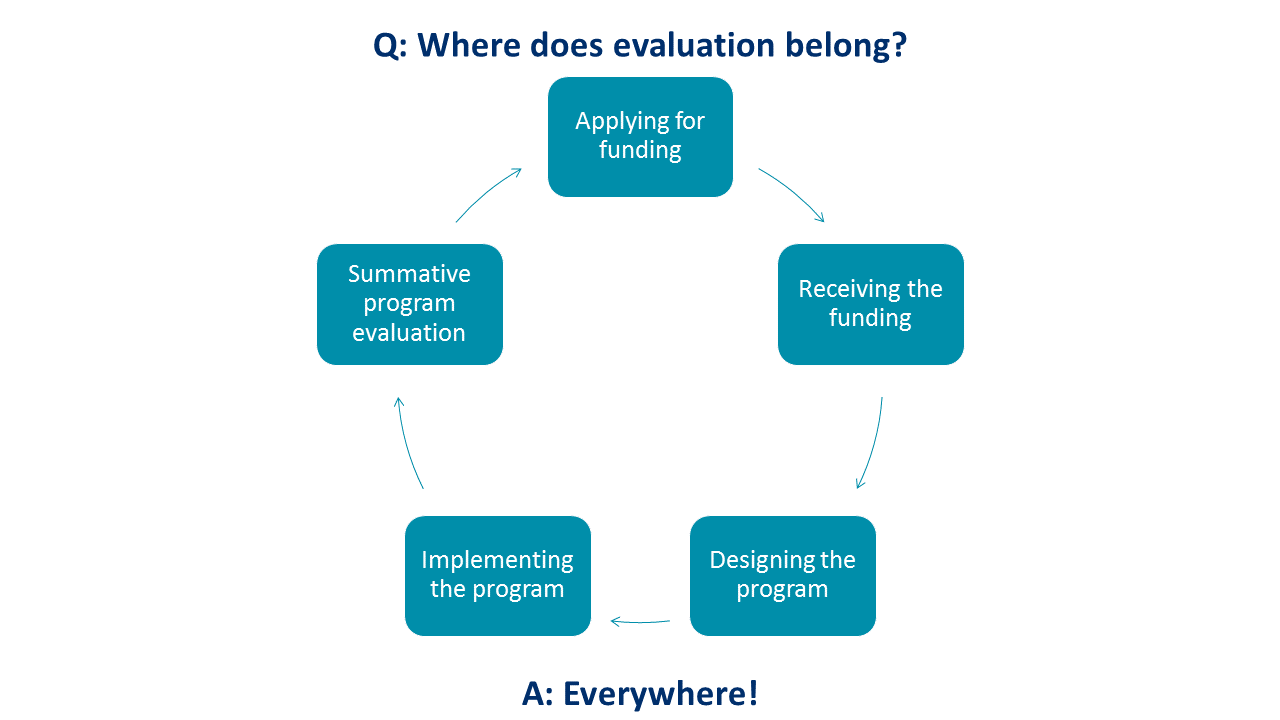

Evaluation is our bread-and-butter here at HARC (check out my previous posts here and here). Unfortunately, a common myth about evaluation is that it happens only at the end of a project or program. Evaluation does happen at the end, but a more accurate statement is that it has a place in every stage of a program cycle, from planning to implementation to summation and back to planning for the next round.

Planning Stage

First and foremost, the planning stage. If you're a nonprofit, this is probably when you're writing a grant or making some similar request for funding. If you're fortunate enough to have the money to put on programs and services on your own… then I'm jealous. I want to be you.

But anyway, the planning phase is when you need to create a strong evaluation plan. I've found that creating the evaluation plan can actually help fill in gaps in the program design and strengthen the overall program. It's also when you make a budget for evaluation, because, as we all know, if something's not in the budget from the outset, it's really hard to shoehorn it in later. FYI, about 10% of the overall program budget is a good rough estimate for what to spend on evaluation. This can vary widely (more if it's research-intensive, less if it's a huge grant, etc.), but it's nice to know the ballpark.

At the Beginning of the Program

Once you get the money (hooray!) and you're getting ready to start your program, you need to think about when and where evaluation should be done, because the answer might very well be "right now." For example, let's say your program strives to increase self-confidence and leadership skills for lesbian, gay, bisexual, transgender, and questioning (LGBTQ) youth, like the awesome camp we recently helped evaluate for our partner Safe Schools Desert Cities (SSDC).

Because your program goal revolves around change (an increase or a decrease), you need to do a pre-test and a post-test. You cannot demonstrate change at the end of the project if you don't know where you started.
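To make the "where you started" point concrete, here is a minimal sketch of how a pre-test and post-test combine to show change. It assumes each participant answers the same survey before and after the program and that you compare average scores; the function name `percent_change` and the scores are hypothetical, made up purely for illustration (not SSDC's data or method). Notice that the calculation is impossible without the pre-test baseline.

```python
from statistics import mean


def percent_change(pre_scores, post_scores):
    """Percent change in the average score from pre-test to post-test.

    Hypothetical helper for illustration: the pre-test average is the baseline,
    and without it there is nothing to measure change against.
    """
    baseline = mean(pre_scores)
    if baseline == 0:
        raise ValueError("baseline average is zero; percent change is undefined")
    return (mean(post_scores) - baseline) / baseline * 100


# Made-up self-confidence scores on a 1-5 scale (illustrative only, not real data)
pre = [2.0, 3.0, 2.5, 2.5]
post = [3.5, 4.0, 3.5, 4.0]

change = percent_change(pre, post)
print(f"Average change: {change:.1f}%")
# Compare against a stated goal, such as a 50% increase
print("Met 50% goal" if change >= 50 else "Did not meet 50% goal")
```

A real evaluation would also consider sample size and whether the change is statistically meaningful, but even this simple comparison needs both tests.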

[Fortunately, SSDC did a pre-test and a post-test, so they were able to measure the impact their wonderful camp had on these teens, and it was pretty incredible.]

I have a recurring nightmare where an imaginary client comes to me and says something like, “I have a really comprehensive program and my goal was to increase [insert-awesome-measure-of-success] by 50%, and I’ve been running it for several years. Can you evaluate whether I met my goal?” Cringe. And then I wake up screaming, “Pre-test! You need a pre-test!!”

[Side note: If you just read my nightmare scenario and thought, “Oh, shoot, that’s exactly what I was gonna say!”, the answer is yes, evaluation can still be done to show that your program made a difference… but it’s probably not going to tell you if you increased your target measure by 50%. After-the-fact evaluation CAN happen, it’s just not as good. But that’s for another post.]

During the Program

Once the program is in place, you can do some on-the-ground, quick-and-dirty evaluation. When I was doing evaluation in the museum/informal science education/National Science Foundation world, we called this "remedial evaluation," as in "figure out if you're doing something wrong and then fix it quickly so that your final evaluation will be able to show awesomeness." But nowadays I've heard people object to the term "remedial" because it has negative connotations. I guess "remedial" = "failure" in many people's minds. To me, it's a chance to find out where you could be better.

I promise you, the chances that your program is perfect are slim. Almost every program out there could get better in some way, and remedial evaluation can help you figure that out quickly (not at the end of the year, or the end of the five-year project, or some other time in the distant future when you can't do anything about it). So, remedial evaluation isn't always pretty, it's not always methodologically sound, and the "reports" are often just a conversation or a quick email about what should change. But it's still incredibly useful.

At the End of the Program

Evaluation happens at the end of the program, too, as we all know. This is often called "summative evaluation," as in "sum up what you did and what difference it made." This is where the more rigorous methods come into play, along with the extensive reports with pretty charts and graphs and the overall conclusions about how you changed people's lives with your actions.

The fun part about this last bit is that it totally feeds back into the cycle. Now that you have proof of your awesomeness and how your program changes lives/makes unicorns real/brings about world peace, you can go do it again. Funders want to fund successful programs, and with your snazzy evaluation report that says, “We’re successful!” the chances of you getting funding to do it again—bigger and better this time!—skyrocket. And then you start all over again with planning your next project—including the evaluation that goes in it.